Dev Logs: Darkwood (WIP)

I decided to try to reproduce the gameplay of one of my favorite horror games, Darkwood, developed by Acid Wizard Studio.

I've fallen in love with its dense, creepy atmosphere and its unforgiving gameplay. I thought it would be an interesting challenge to reproduce such mechanics in a 3D world space.

Prototype Scope:

First of all, I had to define the goal of my prototype, since I won't throw myself alone into a years-long project. In my opinion, the main point of Darkwood is the exploration of dangerous areas, with limited information about our surroundings, to find resources and equipment.

So I decided to develop these systems:

- Dynamic vision using the character's field of view.
- Optional feedback.

Character World-Space Motion

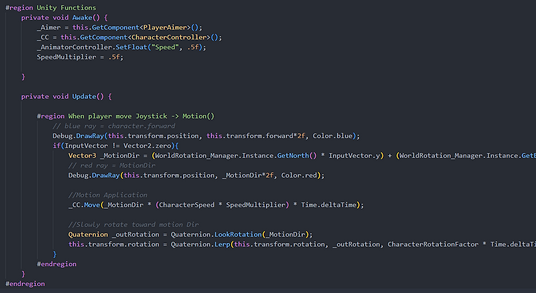

I began my journey by downloading a humanoid 3D model and movement animations from Mixamo.com. I started by creating an empty GameObject, parent of my camera, with a script returning its forward and left vectors. This object becomes my world orientation reference, because I need my character to always move in the same direction when the player presses a movement input (when UP is pressed, the character moves upward from the camera's perspective).

Then I created a simple Mover script connected to Unity's new Input System to move my character in that space. I also update my character's rotation with a quaternion Lerped between its current rotation and its motion direction, so that it turns smoothly toward the direction of travel.

I also chose to use the Character Controller component to move my Player Character, out of curiosity, because I had never used it on a project before and because I don't think I'll need a deeper physical controller later on.
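As a rough illustration of the camera-relative movement described above, here is a minimal sketch. The class name `CameraRelativeMover`, the `SetMoveInput` method and the speed values are hypothetical placeholders, not my actual script, and the input value is assumed to be forwarded from an Input System callback.

```csharp
using UnityEngine;

// Hypothetical sketch: names and values are illustrative only.
[RequireComponent(typeof(CharacterController))]
public class CameraRelativeMover : MonoBehaviour
{
    [SerializeField] private Transform worldReference; // empty parent of the camera
    [SerializeField] private float moveSpeed = 3f;     // assumed value, units per second
    [SerializeField] private float turnSpeed = 8f;     // assumed value

    private CharacterController controller;
    private Vector2 moveInput;

    private void Awake() => controller = GetComponent<CharacterController>();

    // Assumed to be called from the input callback with the 2D movement value.
    public void SetMoveInput(Vector2 input) => moveInput = input;

    private void Update()
    {
        // Flatten the reference axes so a tilted reference cannot push the character up or down.
        Vector3 forward = Vector3.ProjectOnPlane(worldReference.forward, Vector3.up).normalized;
        Vector3 right = Vector3.ProjectOnPlane(worldReference.right, Vector3.up).normalized;

        // Combine the axes and cap the length so diagonals are not faster.
        Vector3 direction = Vector3.ClampMagnitude(forward * moveInput.y + right * moveInput.x, 1f);

        // SimpleMove expects a velocity in units per second and applies gravity itself.
        controller.SimpleMove(direction * moveSpeed);

        // Turn gradually toward the direction of travel, only while actually moving.
        if (direction.sqrMagnitude > 0.0001f)
        {
            Quaternion target = Quaternion.LookRotation(direction, Vector3.up);
            transform.rotation = Quaternion.Slerp(transform.rotation, target, turnSpeed * Time.deltaTime);
        }
    }
}
```

The sketch uses the reference's right vector where my own script returns the left one; the two only differ by a sign.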

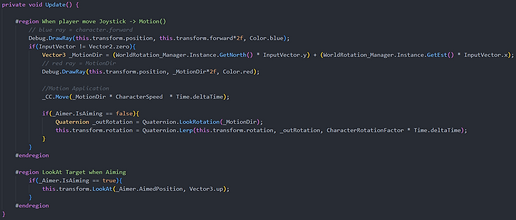

Next, before adding the weapon system and shooting, I needed the ability to aim. So I created a new Input Event and bound it in my script. Now, when the right mouse button is pressed, my character faces the mouse position in the world while still moving along my camera axes.

I had to modify the rotation part of my script so that it only applies when the player is not aiming.

After that, I blended my motion animations so that they are linked to the direction of my motion. This means I set a mathematical value on my MotionX parameter (a float blending forward motion, where 1 means forward and -1 means backward) as follows:

MotionX = Vector3.Dot(this.transform.forward, MotionDirection)

This can be roughly translated as: if my world-space motion (the player's input) matches my character object's forward vector, then I play my walking-forward animation.

This system works in every case, even if I rotate the world-space reference object and my camera.
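To make the dot-product idea concrete, here is a small sketch that feeds the Animator. Only `MotionX` comes from my setup; the sideways `MotionY` parameter, the class name and the damping time are assumptions made for the example.

```csharp
using UnityEngine;

// Hypothetical sketch: MotionY and the damping time are assumptions.
public class MotionBlendParameters : MonoBehaviour
{
    [SerializeField] private Animator animator;

    private static readonly int MotionXHash = Animator.StringToHash("MotionX");
    private static readonly int MotionYHash = Animator.StringToHash("MotionY");

    // motionDirection is the world-space movement direction (length 0..1).
    public void UpdateBlend(Vector3 motionDirection)
    {
        // +1 when moving along the character's forward, -1 when backing up.
        float motionX = Vector3.Dot(transform.forward, motionDirection);
        // +1 when strafing to the character's right, -1 to its left.
        float motionY = Vector3.Dot(transform.right, motionDirection);

        // The damped overload smooths the parameter over roughly 0.1 s.
        animator.SetFloat(MotionXHash, motionX, 0.1f, Time.deltaTime);
        animator.SetFloat(MotionYHash, motionY, 0.1f, Time.deltaTime);
    }
}
```

Because both values are measured against the character's own axes, the result does not depend on how the camera or the world reference is rotated.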

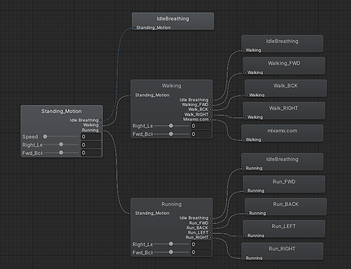

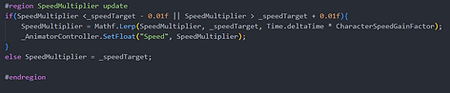

Next, I created a speed variable in this Animator Controller and a running Blend Space. This variable Lerps over time between three values (0f = immobile, 0.5f = walking or crouching, 1f = running) depending on my player's state.

This variable is also used as a motion speed multiplier to create smooth start and stop transitions.

I've also added ChangeState and Sprint functions linked to Unity's new Input System, new blended crouch motions, and transitions between Standing and Crouched.

This system should be enough for my prototype's scope, so I probably won't push the movement system further unless another system conflicts with it or causes bugs later in development.
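A minimal sketch of that speed variable could look like the following. The `MotionState` enum, the `Speed` parameter name and the rates are assumptions for illustration.

```csharp
using UnityEngine;

// Hypothetical states; the real project may organize them differently.
public enum MotionState { Idle, Walking, Crouching, Running }

public class SpeedBlend : MonoBehaviour
{
    [SerializeField] private Animator animator;
    [SerializeField] private float blendRate = 5f; // assumed smoothing rate
    [SerializeField] private float maxSpeed = 5f;  // assumed running speed, units per second

    private static readonly int SpeedHash = Animator.StringToHash("Speed"); // assumed parameter name

    public MotionState State { get; set; } = MotionState.Idle;
    public float Speed { get; private set; }

    // The same 0..1 value scales the actual movement speed.
    public float CurrentMoveSpeed => maxSpeed * Speed;

    private static float TargetFor(MotionState state)
    {
        switch (state)
        {
            case MotionState.Running: return 1f;
            case MotionState.Walking:
            case MotionState.Crouching: return 0.5f;
            default: return 0f;
        }
    }

    private void Update()
    {
        // Ease toward the target value; starts and stops become gradual.
        Speed = Mathf.Lerp(Speed, TargetFor(State), blendRate * Time.deltaTime);
        animator.SetFloat(SpeedHash, Speed);
    }
}
```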

Weapon System

First of all, I downloaded some weapon meshes and weapon-handling animations from mixamo.com to build this system.

I quickly realized that, to deploy new weapons easily, I would have to use C# inheritance. I also decided to keep all the variables they require in my Weapon classes. As for the variables describing a weapon's characteristics, I chose to store them in a ScriptableObject. This architecture lets me iterate quickly on my weapons, and even create weapon profiles that will be integrated much later.
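The split between an inherited Weapon class and a ScriptableObject of characteristics could be sketched as below. Only `StartShooting()` and `StopShooting()` are names from my project; `WeaponProfile`, its fields, `Pistol` and the other members are hypothetical. In a real project each class would live in its own file named after the class.

```csharp
using UnityEngine;

// Hypothetical data asset holding a weapon's characteristics.
[CreateAssetMenu(menuName = "Weapons/Weapon Profile")]
public class WeaponProfile : ScriptableObject
{
    public float damage = 10f;
    public int magazineSize = 8;
    public float reloadTime = 1.5f;
    public float fireInterval = 0.4f; // seconds between two shots
}

// Base class: shared state and logic for every weapon.
public abstract class Weapon : MonoBehaviour
{
    [SerializeField] protected WeaponProfile profile;

    protected int ammoInMagazine;
    private float nextShotTime;

    protected virtual void Awake() => ammoInMagazine = profile.magazineSize;

    public virtual void StartShooting() => TryFire();
    public virtual void StopShooting() { }

    public void Refill() => ammoInMagazine = profile.magazineSize;

    protected bool TryFire()
    {
        // Refuse to fire with an empty magazine or before the interval has elapsed.
        if (ammoInMagazine <= 0 || Time.time < nextShotTime) return false;

        ammoInMagazine--;
        nextShotTime = Time.time + profile.fireInterval;
        Fire();
        return true;
    }

    // Each concrete weapon decides what a shot actually does.
    protected abstract void Fire();
}

// A new weapon only has to describe its own shot.
public class Pistol : Weapon
{
    protected override void Fire()
    {
        Debug.Log($"Pistol fired for {profile.damage} damage, {ammoInMagazine} rounds left.");
    }
}
```

With this layout, balancing a weapon means editing an asset in the Inspector, and adding a weapon means writing one small subclass.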

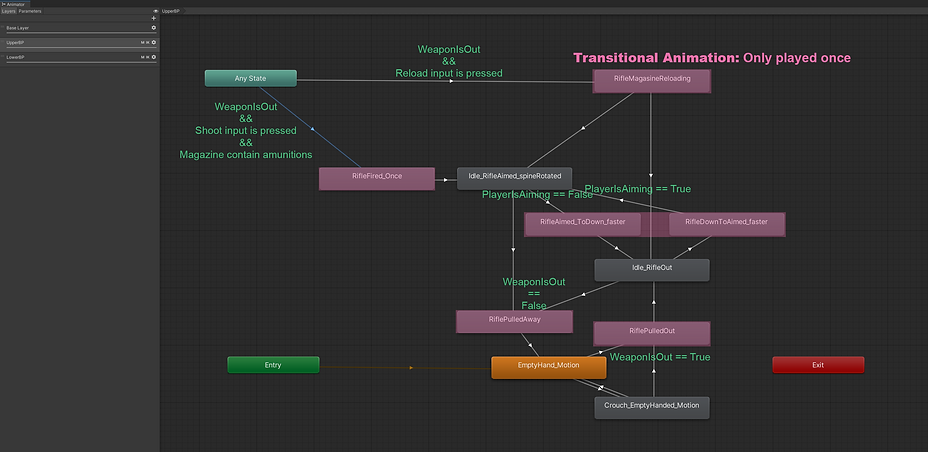

I then modified the Animator Controller layer containing the animations of my avatar's upper body, as well as some variables handling the transitions.

For this, I linked my previous scripts to the updated Animator Controller.

I then created a WeaponManager script that is attached to my Player Character and connected to my new InputManager's functions.

- ShootInput(): connected to the left mouse click, this function launches the StartShooting() and StopShooting() functions from the Weapon classes.
- ReloadEquipedWeapon: connected to the R key on the keyboard, this function launches a coroutine that fills the weapon's magazine after a defined amount of time (inventory and ammunition are not yet implemented).
- ChangeWeaponDrawState(): connected to a temporary key, this function changes the value of the weapon state variable (drawn/stored) and plays the matching animation. These two animations contain scripted events that dynamically re-parent the weapon to a pivot on the character's back or in the dominant hand.

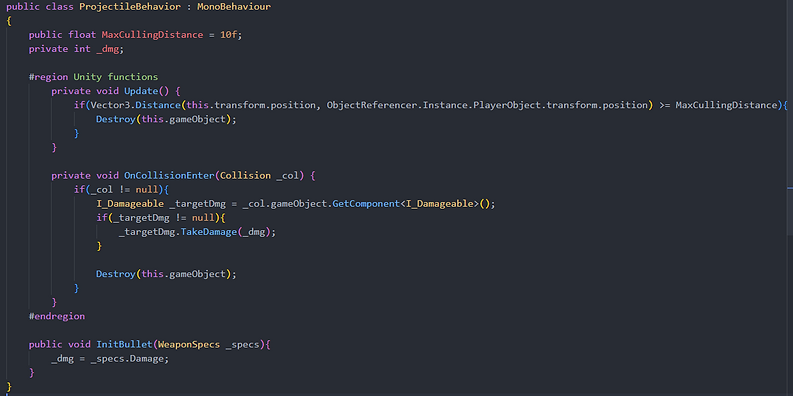

I created a simple script that handles the possible interactions of the projectiles. Projectiles self-destruct after travelling a certain distance or on impact with a surface, an object, or an agent.

In addition, I set up an I_Damageable C# interface to unify damage application, so with a single line of code my weapons can affect any type of object implementing this set of functions.
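Here is a sketch of how a projectile and the interface could fit together. The `ApplyDamage` method, the field values and the per-frame raycast used for hit detection are assumptions; the actual script may rely on collision callbacks instead.

```csharp
using UnityEngine;

// The interface name comes from the project; its single method is an assumption.
public interface I_Damageable
{
    void ApplyDamage(float amount);
}

// Hypothetical projectile that moves forward and checks its path each frame.
public class Projectile : MonoBehaviour
{
    [SerializeField] private float speed = 40f;       // assumed, units per second
    [SerializeField] private float maxDistance = 50f; // assumed
    [SerializeField] private float damage = 10f;      // assumed

    private float travelled;

    private void Update()
    {
        float step = speed * Time.deltaTime;

        // Test the segment covered this frame so fast projectiles do not tunnel through colliders.
        if (Physics.Raycast(transform.position, transform.forward, out RaycastHit hit, step))
        {
            // The single line that applies damage to anything implementing the interface.
            if (hit.collider.TryGetComponent(out I_Damageable target)) target.ApplyDamage(damage);

            Destroy(gameObject);
            return;
        }

        transform.position += transform.forward * step;
        travelled += step;

        // Self-destruct once the maximum distance has been covered.
        if (travelled >= maxDistance) Destroy(gameObject);
    }
}
```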

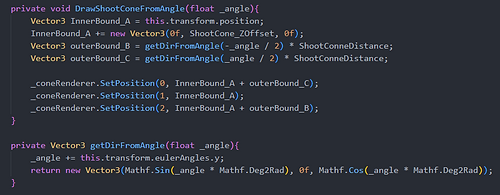

And now, fire spread. As in my inspiration, I wanted to create a cone of fire while the player is aiming, which tightens gradually and then opens up again when the player shoots.

So I updated my PlayerAimer script to display a LineRenderer when the player is aiming with a weapon equipped. I decided to draw this cone from a single Spread value that defines the angle formed by this "triangle". Using only one value allows me to easily modify it at runtime, as mentioned earlier.
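A minimal sketch of a cone driven by one Spread angle is shown below. It is written as a separate hypothetical `SpreadCone` component rather than as part of PlayerAimer, and the angles, rates and method names are assumptions.

```csharp
using UnityEngine;

// Hypothetical sketch: draws a two-segment "cone" from a single spread angle.
[RequireComponent(typeof(LineRenderer))]
public class SpreadCone : MonoBehaviour
{
    [SerializeField] private float range = 10f;       // assumed length of the cone edges
    [SerializeField] private float minSpread = 4f;    // assumed, full angle in degrees
    [SerializeField] private float maxSpread = 30f;   // assumed, full angle in degrees
    [SerializeField] private float tightenRate = 10f; // assumed, degrees per second
    [SerializeField] private float kickPerShot = 12f; // assumed, degrees added per shot

    public float Spread { get; private set; }

    private LineRenderer line;

    private void Awake()
    {
        line = GetComponent<LineRenderer>();
        line.useWorldSpace = false; // points are expressed relative to this transform
        line.positionCount = 3;
        Spread = maxSpread;
    }

    // Show the cone only while aiming.
    public void SetVisible(bool visible) => line.enabled = visible;

    // Call when the weapon fires: the cone opens up again.
    public void OnShot() => Spread = Mathf.Min(maxSpread, Spread + kickPerShot);

    private void Update()
    {
        // The cone tightens at a constant rate while nothing disturbs it.
        Spread = Mathf.MoveTowards(Spread, minSpread, tightenRate * Time.deltaTime);

        float half = Spread * 0.5f;
        Vector3 leftEdge = Quaternion.AngleAxis(-half, Vector3.up) * Vector3.forward * range;
        Vector3 rightEdge = Quaternion.AngleAxis(half, Vector3.up) * Vector3.forward * range;

        line.SetPosition(0, leftEdge);
        line.SetPosition(1, Vector3.zero);
        line.SetPosition(2, rightEdge);
    }

    // Picks a shot direction inside the current cone (assumes an upright character).
    public Vector3 RandomDirection()
    {
        float angle = Random.Range(-Spread * 0.5f, Spread * 0.5f);
        return Quaternion.AngleAxis(angle, Vector3.up) * transform.forward;
    }
}
```

Since both the drawing and the shot direction read the same Spread value, what the player sees always matches where the bullets can go.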

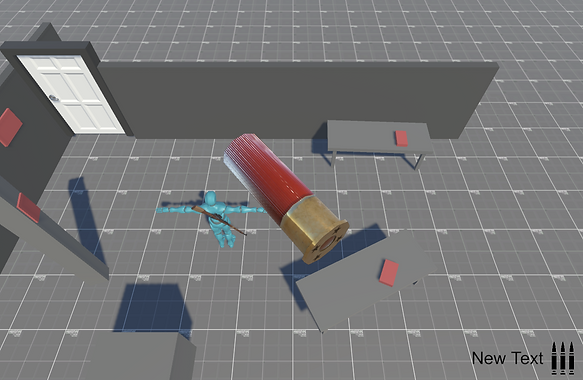

Finally, after adding some VFX, integrating an ammunition counter into the UI, and tweaking my weapon's values, this is the result, still imperfect but functional.

Character Interaction with Objects

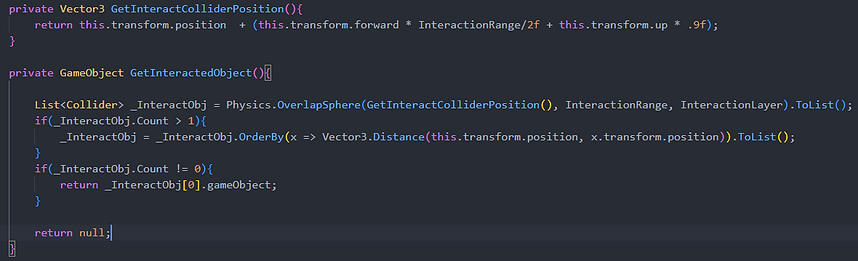

To set up this mechanism, I first had to find a way to discriminate between objects in order to find the one the player wishes to interact with.

To do this, I made a simple function using a SphereCast on a separate layer containing only interactable objects.

Then, in case several objects are eligible, I order them by their distance to the Player Character and select only the nearest one.
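The selection of the nearest candidate can be sketched as follows. Note that this example swaps in `Physics.OverlapSphere` instead of a SphereCast, which is a simplification on my part; the class name, radius and mask field are hypothetical.

```csharp
using UnityEngine;

// Hypothetical sketch using an overlap query rather than a SphereCast.
public class InteractableFinder : MonoBehaviour
{
    [SerializeField] private float radius = 2f;          // assumed reach
    [SerializeField] private LayerMask interactableMask; // layer holding only interactable objects

    // Returns the closest collider on the interactable layer, or null if none is in reach.
    public Collider FindNearest()
    {
        Collider[] hits = Physics.OverlapSphere(transform.position, radius, interactableMask);

        Collider nearest = null;
        float bestSqrDistance = float.MaxValue;

        foreach (Collider hit in hits)
        {
            // Squared distances are enough for comparison and avoid a square root.
            float sqrDistance = (hit.transform.position - transform.position).sqrMagnitude;
            if (sqrDistance < bestSqrDistance)
            {
                bestSqrDistance = sqrDistance;
                nearest = hit;
            }
        }

        return nearest;
    }
}
```

A single pass that keeps the minimum gives the same result as sorting the whole list and taking the first element.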

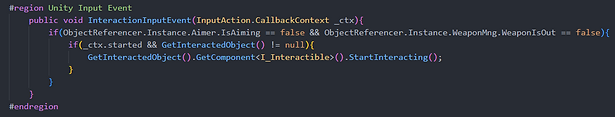

I then created a new Event in my InputManager, temporarily linked to a keyboard key (E), that calls these functions. I also created an I_Interactible interface that is used to launch my interactions from a single function, for any type of interactable object I will develop.

Once this unified system was established, I chose to set up a simple interaction to test its reliability. So I created a door mesh connected to a simple Animator Controller, which moves it between its open and closed states to let the player pass through when he interacts with it.

For this, my StartInteracting() function makes the door non-interactive until further notice, in addition to firing an animation trigger to change state.

I also created an Animation Event, called at the end of the transition animations, which makes the door interactable again. This prevents the player from interacting with it repeatedly during a transition.

Now that my system is functional, I'm going to try something a little more complex. One of the dynamics of horror games that I particularly like is the investigation and the search for clues in the environment.
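The door logic can be sketched like this. `I_Interactible` and `StartInteracting()` are names from my project; the `CanInteract` property, the `Toggle` trigger and the `OnTransitionFinished` event method are assumptions for the example.

```csharp
using UnityEngine;

// Interface name and StartInteracting() come from the project; CanInteract is an assumption.
public interface I_Interactible
{
    bool CanInteract { get; }
    void StartInteracting();
}

[RequireComponent(typeof(Animator))]
public class Door : MonoBehaviour, I_Interactible
{
    private static readonly int ToggleHash = Animator.StringToHash("Toggle"); // assumed trigger name

    private Animator animator;

    public bool CanInteract { get; private set; } = true;

    private void Awake() => animator = GetComponent<Animator>();

    public void StartInteracting()
    {
        // Ignore input while an opening or closing animation is still playing.
        if (!CanInteract) return;

        CanInteract = false;
        animator.SetTrigger(ToggleHash);
    }

    // Assumed to be called by an Animation Event placed at the end of both transition clips.
    public void OnTransitionFinished() => CanInteract = true;
}
```

The Animation Event only finds `OnTransitionFinished` if this script sits on the same GameObject as the Animator playing the clips.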

Since I couldn’t import 3D objects directly into my Canvas, I had to look for a different process that would simulate this possibility.

So I chose to render a virtual image of the object in question using a second Camera and a Render Texture. The trick here was to place this camera, as well as the object to render, on a Layer separate from my gameplay Layers. This way, the gameplay camera controlled by the player never displays these elements, and my rendering camera never displays other objects from the game space, even though they are all in the same scene.

This cartridge is a texture in my Canvas while also being a 3D object in my scene, as the lighting on it suggests.

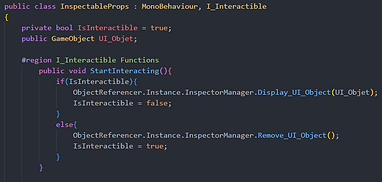
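The two-camera setup can be sketched as below. The layer name `Inspection`, the texture size and the use of a `RawImage` in the Canvas are assumptions made for the example.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical sketch: isolates an inspection layer and shows it in the UI.
public class InspectionRenderSetup : MonoBehaviour
{
    [SerializeField] private Camera gameplayCamera;
    [SerializeField] private Camera inspectionCamera;
    [SerializeField] private RawImage preview;                       // UI element inside the Canvas
    [SerializeField] private string inspectionLayerName = "Inspection"; // assumed layer name

    private RenderTexture target;

    private void Start()
    {
        int layer = LayerMask.NameToLayer(inspectionLayerName);
        if (layer < 0)
        {
            Debug.LogError($"Layer '{inspectionLayerName}' does not exist.");
            return;
        }

        int mask = 1 << layer;

        // The gameplay camera never draws the inspection layer...
        gameplayCamera.cullingMask &= ~mask;
        // ...and the inspection camera draws nothing else.
        inspectionCamera.cullingMask = mask;

        // Render the second camera into a texture and display it in the UI.
        target = new RenderTexture(512, 512, 16);
        inspectionCamera.targetTexture = target;
        preview.texture = target;
    }

    private void OnDestroy()
    {
        if (target == null) return;

        // Detach the texture before releasing its GPU resources.
        if (inspectionCamera != null) inspectionCamera.targetTexture = null;
        target.Release();
    }
}
```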

Now that I have a display process, it's time to dynamically choose the object to display. I therefore implemented my I_Interactible C# interface in an inspectable-object script that starts and stops the inspection process and holds a reference to the object Prefab to display. (I chose a Prefab rather than a copy of my object's Mesh so that I can easily set the object's Transform, and because I had an idea in mind that we will discuss later.) I chose to put the code for this interaction in another object that is referenced by my Singleton GameManager.

The script that starts and stops the interaction with the inspector.

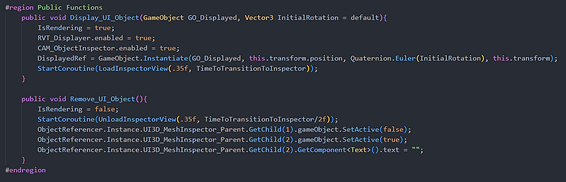

The inspector script, which displays the object passed in after setting it to the rendering camera's layer.

It also contains a few features that gradually add a little post-processing to improve the rendering of this feature.

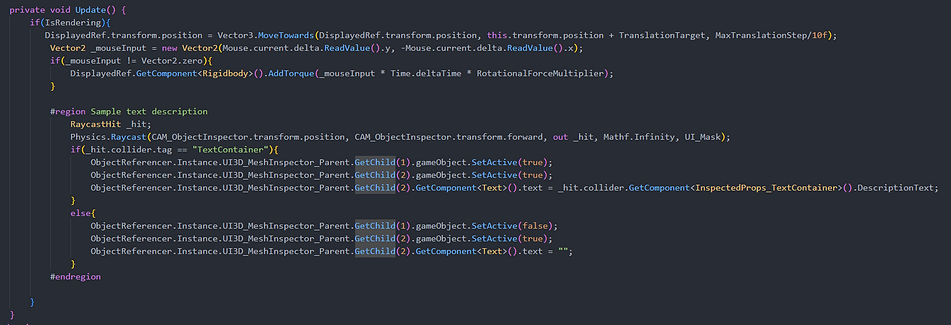

Now that I can make these objects appear dynamically, I will start to manipulate them. For the duration of this interaction, I override my player's inputs:

- My movement keys are used to move the object up and down and left and right.
- Mouse movements are used to orbit the object to see it from every angle.

I will then create a few collision boxes on my object, each containing description text. By casting a ray from my camera, I test for their presence and display their text in my UI text object.

Feedback - Looking at Interactable Objects

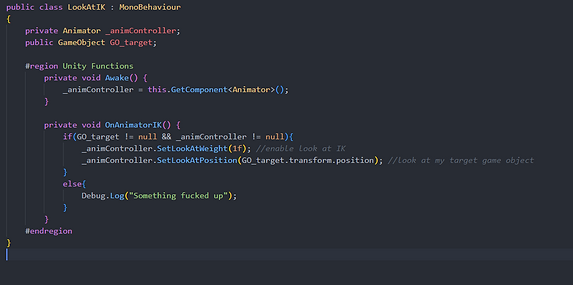

Early in development I came across Unity's Inverse Kinematics and, having worked with third-party alternatives, I decided to give it a try.

So, in order to give the player a hint about what is interactable, I override my character's head orientation to look at those interactable items.

To test the feature, I started with a simple look-at on a single object, which seems to work just fine.

Since the character can only look at one object at a time, I had to find an algorithm to decide which one it will be. First of all, to simulate a field of view, I start by finding all objects on my Interactible Layer that are in range.

I also test, using a Raycast, that each Interactible object is directly visible and not obstructed by walls or other surfaces.

Then, to simulate a field-of-view aperture, I compare the direction from the player to each Interactible object with the player's forward vector. If the dot product of these two normalized vectors is above 0.5f, the object lies within 60 degrees of the player's forward direction, which means it is mostly in front of my player.

Finally, I sort all available objects by distance to the player object and define the closest as my Look IK target.

Next, I use that function in my script's Update to check whether an Interactible object is available. If so, I slowly move my Look target toward it over time. If none is found, the target moves back in front of my player object.
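The filtering steps above (range, field of view, line of sight, nearest) can be sketched in one function. The class name, the `eyes` transform, the obstacle mask and the range are assumptions.

```csharp
using UnityEngine;

// Hypothetical sketch of the look-target selection.
public class LookTargetSelector : MonoBehaviour
{
    [SerializeField] private float range = 5f;           // assumed
    [SerializeField] private LayerMask interactibleMask; // layer of the candidate objects
    [SerializeField] private LayerMask obstacleMask;     // walls and other blocking surfaces
    [SerializeField] private Transform eyes;             // origin of the visibility test

    public Transform FindLookTarget()
    {
        // 1. Everything on the interactible layer within range.
        Collider[] candidates = Physics.OverlapSphere(eyes.position, range, interactibleMask);

        Transform best = null;
        float bestSqrDistance = float.MaxValue;

        foreach (Collider candidate in candidates)
        {
            Vector3 toTarget = candidate.bounds.center - eyes.position;
            float sqrDistance = toTarget.sqrMagnitude;
            if (sqrDistance < 0.0001f) continue;

            // 2. Field of view: a dot product above 0.5 means within 60 degrees of forward.
            Vector3 direction = toTarget.normalized;
            if (Vector3.Dot(transform.forward, direction) <= 0.5f) continue;

            // 3. Line of sight: reject the object if an obstacle lies between the eyes and it.
            if (Physics.Raycast(eyes.position, direction, Mathf.Sqrt(sqrDistance), obstacleMask)) continue;

            // 4. Keep the closest remaining candidate.
            if (sqrDistance < bestSqrDistance)
            {
                bestSqrDistance = sqrDistance;
                best = candidate.transform;
            }
        }

        return best;
    }
}
```

Running the cheap dot-product test before the raycast avoids paying for physics queries on objects behind the player.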
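Driving the head with Unity's built-in look-at IK could then look like this sketch. It assumes a Humanoid rig with "IK Pass" enabled on the Animator layer; the class name, the rest-point values and the follow speed are hypothetical.

```csharp
using UnityEngine;

// Hypothetical sketch: requires a Humanoid avatar and "IK Pass" enabled on the layer.
[RequireComponent(typeof(Animator))]
public class HeadLook : MonoBehaviour
{
    [SerializeField] private float followSpeed = 4f;  // assumed
    [SerializeField] private float restDistance = 2f; // assumed distance of the idle look point
    [SerializeField] private float headHeight = 1.6f; // assumed height of the idle look point

    // Set by whatever selects the current interactable, or left null when none is found.
    public Transform Target { get; set; }

    private Animator animator;
    private Vector3 lookPoint;

    private void Awake()
    {
        animator = GetComponent<Animator>();
        lookPoint = RestPoint();
    }

    // Point straight ahead of the character at head height.
    private Vector3 RestPoint() =>
        transform.position + Vector3.up * headHeight + transform.forward * restDistance;

    private void Update()
    {
        // Drift toward the target, or back in front of the character when there is none.
        Vector3 goal = Target != null ? Target.position : RestPoint();
        lookPoint = Vector3.Lerp(lookPoint, goal, followSpeed * Time.deltaTime);
    }

    // Called by Unity during the IK pass of the Animator.
    private void OnAnimatorIK(int layerIndex)
    {
        animator.SetLookAtWeight(1f);
        animator.SetLookAtPosition(lookPoint);
    }
}
```

Moving an intermediate look point instead of snapping to the target keeps the head from jerking when the selected object changes.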
